How Do Self-Driving Cars Not Get Lost? Tackling Visual Ambiguity in a World of Twins

- Raghu Ram

- Dec 7, 2025

- 5 min read

The other day, my colleague and I were discussing how difficult it is to find a parked car in a multi-level parking lot: we may forget the floor number, and every level looks identical.

He looked at me and asked, “How do you think our ADAS (Advanced Driver-Assistance Systems) vehicle handles it?” I had a few answers in mind, but I wanted to write an article about it and, along the way, research more of the ways engineers have devised to solve this problem.

To put this in context, imagine your GPS is failing and you’re cruising through a maze of identical buildings. Now picture a robot facing the same nightmare. When a robot tries to map such an environment, this phenomenon is called visual ambiguity.

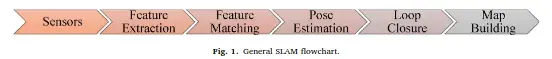

First, let’s cover some background before we get to this question. If you already know what SLAM (Simultaneous Localization and Mapping) is and how symmetry affects a robot, feel free to skip ahead to the later sections.

Why do symmetric structures break robots’ brains?

Suppose we are walking in a new city and someone asks for our address; we look for a landmark to guide ourselves, and them, to our home. In the same way, when a robot is in an unknown environment and wants to know its location within a map of that environment, it relies on a SLAM algorithm. As the name suggests, SLAM refers to a family of algorithms that simultaneously map the environment and localize the robot within it.

“SLAM aims at building a globally consistent representation of the environment, leveraging both ego-motion measurements and loop closures” [3]

To do this, the robot takes measurements with sensors such as cameras, LiDAR and, in some cases, sonar. Essentially, any sensor that provides information about the environment, such as distances or landmark observations, can be used to perform SLAM [1].

Most SLAM systems are split into a front end and a back end. The front end processes the sensor information: it extracts features, associates measurements with landmarks (data association) and detects candidate loop closures, turning raw data into a form the estimator can use.

The back end, by contrast, is more or less the same across most SLAM algorithms. It performs inference on the data produced by the front end: given the sensor measurements and the control inputs to the robot, it estimates the map and the trajectory of the robot or autonomous vehicle, typically by optimization.

The back end feeds the estimated map back to the front end, where landmark information is stored, to help identify a “loop closure”. A loop closure occurs when the robot recognizes that it has returned to a place it has visited before. Each loop closure ties the current pose to the earlier one, so the drift accumulated in odometry and the errors in landmark identification along the driven trajectory can be corrected.

This keeps both the generated map and the estimated trajectory accurate.
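To make the effect of a loop closure concrete, here is a toy sketch assuming a robot that moves along a single axis, so each pose is just one number. It frames the back end’s job as weighted least squares over a pose graph: odometry edges link consecutive poses, and one high-weight loop-closure edge states that the final pose is the same place as the start. `Edge`, `solve_linear` and `optimize_pose_graph_1d` are hypothetical names for illustration; real systems work in 2D or 3D with nonlinear solvers, and this is not how any particular SLAM package is implemented.

```python
from dataclasses import dataclass


@dataclass
class Edge:
    i: int         # index of the earlier pose
    j: int         # index of the later pose
    z: float       # measured displacement x_j - x_i, in metres
    weight: float  # confidence in the measurement (inverse variance)


def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[k]] for k, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def optimize_pose_graph_1d(num_poses, edges):
    """Weighted least squares over 1D poses, with pose 0 anchored at 0.0."""
    n = num_poses - 1                  # unknowns: x_1 .. x_{num_poses - 1}
    h = [[0.0] * n for _ in range(n)]  # normal-equation matrix (J^T W J)
    g = [0.0] * n                      # right-hand side (J^T W z)
    for e in edges:
        # The residual (x_j - x_i) - z has coefficient +1 on x_j and -1 on x_i.
        coeffs = {}
        for idx, sign in ((e.j, 1.0), (e.i, -1.0)):
            if idx != 0:               # the anchored pose is not a variable
                coeffs[idx - 1] = coeffs.get(idx - 1, 0.0) + sign
        for p, cp in coeffs.items():
            g[p] += e.weight * cp * e.z
            for q, cq in coeffs.items():
                h[p][q] += e.weight * cp * cq
    return [0.0] + solve_linear(h, g)


if __name__ == "__main__":
    # True motion: +1, +1, -1, -1 (out and back). Odometry is biased by +0.1 m.
    odometry = [Edge(0, 1, 1.1, 1.0), Edge(1, 2, 1.1, 1.0),
                Edge(2, 3, -0.9, 1.0), Edge(3, 4, -0.9, 1.0)]
    # Loop closure: the robot recognizes pose 4 as the starting place.
    loop_closure = Edge(0, 4, 0.0, 100.0)
    print(optimize_pose_graph_1d(5, odometry + [loop_closure]))
```

Dead reckoning alone would end 0.4 m from the start. With the loop closure, the final pose lands within a millimetre of it and the turnaround point moves from 2.2 m to about 2.0 m, because the least-squares solution spreads the correction evenly over the four odometry steps instead of dumping it on the last one.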

![Front-end and back-end in a typical SLAM system. The back end can provide feedback to the front-end for loop closure detection and verification. [3]](https://static.wixstatic.com/media/e0ee68_1ecd1b0160384f5d8381a70b09a11263~mv2.webp/v1/fill/w_874,h_333,al_c,q_80,enc_avif,quality_auto/e0ee68_1ecd1b0160384f5d8381a70b09a11263~mv2.webp)

However, when the robot maps environments that are symmetric or repetitive, such as identical rooms or corridors, the extracted features look the same in different places, which makes data association in the front end ambiguous. This is called perceptual aliasing. It can cause genuine loop closures to be missed or false ones to be detected, which leads to inconsistent maps. LiDAR-based SLAM suffers from the same problem: repetitive geometry can confuse scan matching.
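The sketch below shows why aliasing is dangerous for data association, assuming each place is summarized by a small appearance descriptor (the numbers are made up). It matches a query against a database of places by cosine distance and adds a ratio test borrowed from Lowe’s SIFT feature matching, not from any paper cited here: if the runner-up is nearly as close as the best match, the match is rejected as ambiguous instead of becoming a possibly false loop closure. `match_place` and the `ratio` value of 0.8 are illustrative assumptions, and descriptors are assumed to be non-zero vectors.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity, clamped at 0 to absorb rounding error."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return max(0.0, 1.0 - dot / norms)


def match_place(query, database, ratio=0.8):
    """Return the index of the best-matching place, or None if ambiguous."""
    dists = sorted((cosine_distance(query, d), i) for i, d in enumerate(database))
    best_dist, best_idx = dists[0]
    if len(dists) > 1:
        second_dist = dists[1][0]
        # Ratio test: reject when the runner-up is almost as close as the best.
        if best_dist >= ratio * second_dist:
            return None
    return best_idx


if __name__ == "__main__":
    corridor = [1.0, 0.2, 0.0, 0.5]
    lobby = [0.0, 1.0, 0.8, 0.1]
    database = [corridor, list(corridor), lobby]  # places 0 and 1 are visual twins
    print(match_place(corridor, database))  # ambiguous between the twins
    print(match_place(lobby, database))     # the distinctive lobby is matched
```

The two identical corridors are rejected as ambiguous, while the lobby is matched. Rejecting a match does not remove the aliasing; it only keeps it from corrupting the map, which is why the approaches that follow look for extra information that tells the twins apart.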

How did Visual SLAM researchers tackle this issue?

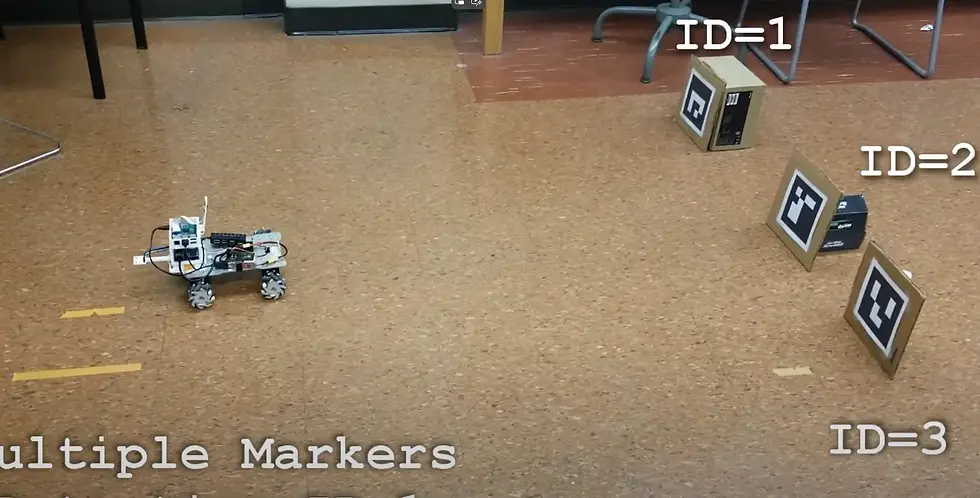

Visual SLAM, a SLAM approach based on RGB-D and stereo cameras, often relies on low-level features such as edges, contours, colors and textures. In repetitive environments these features suffer from perceptual confusion. One way around this is to use artificial landmarks or object-level features.

Artificial landmarks are physical objects placed in the environment so that a SLAM system can easily detect and distinguish them. Computer vision algorithms recognize them and use them to mark locations during SLAM. This approach is well suited to indoor lab projects.

Other approaches use non-visual environmental information such as magnetic fields or radio-frequency signals. However, these need pre-installed infrastructure or additional sensors.

Some newer techniques use pretrained neural networks to extract semantic information, such as door numbers, shelf numbers and directional signs, to distinguish similar areas of the map. One research team [4] used the foundation models BLIP-2 and ChatGPT to add these semantic anchors. They compared their results against ORB-SLAM3, one of the most widely used visual SLAM systems, and reported improvements.

Another team [5] focuses specifically on recognizing previously visited locations using two separate branches. A Visual Place Recognition branch extracts visual features and aggregates them into a global descriptor that represents the whole image. A “Scene Text Spotting” branch (which also uses LLMs) detects distinctive text in the scene and uses it as an additional feature to improve place recognition. This approach is aimed mainly at indoor scenarios.
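As a hedged illustration of why a text branch helps, the sketch below scores candidate places by a weighted sum of global-descriptor cosine similarity and the overlap (Jaccard index) of spotted text tokens. This simple late fusion is an assumption for illustration, not the architecture of [5]; `fused_score`, `recognize`, the dictionary layout and `alpha = 0.5` are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two non-zero global descriptors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def text_similarity(words_a, words_b):
    """Jaccard overlap of spotted text tokens, e.g. door numbers."""
    a, b = set(words_a), set(words_b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def fused_score(query, place, alpha=0.5):
    """Hypothetical fusion: alpha * visual + (1 - alpha) * text similarity."""
    visual = cosine_similarity(query["descriptor"], place["descriptor"])
    text = text_similarity(query["text"], place["text"])
    return alpha * visual + (1 - alpha) * text


def recognize(query, places, alpha=0.5):
    """Index of the place with the highest fused score."""
    return max(range(len(places)), key=lambda i: fused_score(query, places[i], alpha))


if __name__ == "__main__":
    corridor = [0.9, 0.1, 0.4]
    places = [
        {"descriptor": corridor, "text": ["ROOM", "214"]},
        {"descriptor": corridor, "text": ["ROOM", "314"]},  # visual twin of place 0
    ]
    query = {"descriptor": corridor, "text": ["ROOM", "314"]}
    print(recognize(query, places))  # the door number breaks the tie
```

The two corridors are indistinguishable to the visual branch, so the door number alone decides the match. The weight `alpha` trades off the two cues; in practice it would need tuning, since spotted text can also be missing or misread.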

How do LiDAR-based SLAM approaches combat symmetry?

LiDAR-based place recognition faces two main issues [6]. First, the descriptor must be rotation invariant, so that a place is associated correctly even when it is revisited from a different viewpoint. Second, it must handle noise, for example from variations in point-cloud resolution. Histogram-based place recognition methods, which group the points of a cloud into bins based on features such as angles, distances and elevation, do not fully address these challenges, because summarizing the cloud as a histogram discards much of the scene’s spatial layout.
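A toy histogram descriptor shows both the appeal and the weakness. The sketch below, assuming points are (x, y, z) tuples in metres around the sensor, builds a normalized histogram of horizontal ranges. Because range ignores bearing, the descriptor is rotation invariant, but that same property throws away the layout. `range_histogram`, its 10 bins and 50 m range are illustrative assumptions, not a specific published descriptor.

```python
import math


def range_histogram(points, num_bins=10, max_range=50.0):
    """Normalized histogram of horizontal ranges (bearing is discarded)."""
    counts = [0] * num_bins
    for x, y, _z in points:
        r = math.hypot(x, y)
        if r < max_range:
            counts[min(int(r / max_range * num_bins), num_bins - 1)] += 1
    total = sum(counts) or 1
    return [c / total for c in counts]


def histogram_l1(h1, h2):
    """L1 distance between two normalized histograms."""
    return sum(abs(a - b) for a, b in zip(h1, h2))


if __name__ == "__main__":
    pillar_east = [(12.0, 0.0, z) for z in (0.5, 1.0, 1.5)]
    wall_north = [(x, 32.0, 1.0) for x in (-1.0, 0.0, 1.0)]
    wall_east = [(32.0, y, 1.0) for y in (-1.0, 0.0, 1.0)]
    place_a = pillar_east + wall_north
    place_b = pillar_east + wall_east  # same objects, different layout
    print(histogram_l1(range_histogram(place_a), range_histogram(place_b)))
```

A place with a wall to the north and one with the same wall directly behind the pillar to the east produce identical histograms, so their distance is zero: two different places collapse into one descriptor.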

Scan Context [6] is one approach to improving loop closure accuracy. It takes a single 3D scan and encodes the entire point cloud into a matrix of egocentric 2.5D information: the area around the sensor is divided into rings and sectors, and each bin stores the maximum height of the points that fall into it. This bin encoding is efficient and preserves the internal structure of the point cloud. Matching happens in two phases: a rotation-invariant sub-descriptor (the ring key) is used for a fast nearest-neighbor search, and the best candidates are then compared pairwise, column by column, to confirm recognition. The name reflects this design: the descriptor captures the whole LiDAR scan as a 2.5D contextual spatial layout, which makes scans easy to compare.
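Below is a compact, brute-force sketch of the Scan Context idea, assuming points arrive as (x, y, z) tuples with heights measured so that they are positive (for example, from the ground). It builds the ring-by-sector max-height matrix, computes a ring key as the fraction of occupied bins per ring, and compares two descriptors by the mean column-wise cosine distance, trying every column shift. The defaults (20 rings, 60 sectors, 80 m maximum range) are assumptions for illustration, and a real system would search ring keys with a k-d tree rather than comparing every pair.

```python
import math


def scan_context(points, num_rings=20, num_sectors=60, max_range=80.0):
    """Encode (x, y, z) points as a rings x sectors matrix of maximum heights."""
    desc = [[0.0] * num_sectors for _ in range(num_rings)]
    for x, y, z in points:
        r = math.hypot(x, y)
        if r >= max_range:
            continue
        theta = math.atan2(y, x) % (2 * math.pi)
        ring = min(int(r / max_range * num_rings), num_rings - 1)
        sector = min(int(theta / (2 * math.pi) * num_sectors), num_sectors - 1)
        # Keep the tallest point per bin; empty (or non-positive) bins stay 0.
        desc[ring][sector] = max(desc[ring][sector], z)
    return desc


def ring_key(desc):
    """Rotation-invariant sub-descriptor: fraction of occupied bins per ring."""
    return [sum(1 for v in row if v > 0) / len(row) for row in desc]


def column_distance(a, b, shift):
    """Mean cosine distance between the columns of a and column-shifted b."""
    num_rings, num_sectors = len(a), len(a[0])
    total, used = 0.0, 0
    for j in range(num_sectors):
        col_a = [a[i][j] for i in range(num_rings)]
        col_b = [b[i][(j + shift) % num_sectors] for i in range(num_rings)]
        norm_a = math.sqrt(sum(v * v for v in col_a))
        norm_b = math.sqrt(sum(v * v for v in col_b))
        if norm_a == 0 or norm_b == 0:
            continue  # skip sectors that are empty in either scan
        dot = sum(p * q for p, q in zip(col_a, col_b))
        total += 1.0 - dot / (norm_a * norm_b)
        used += 1
    return total / used if used else 1.0


def scan_context_distance(a, b):
    """Try every column shift (every yaw); return (distance, best shift)."""
    num_sectors = len(a[0])
    best = min(range(num_sectors), key=lambda s: column_distance(a, b, s))
    return column_distance(a, b, best), best
```

Because a yaw rotation of the sensor mostly just shifts the columns, rotating a scan by 90° leaves its ring key unchanged, and `scan_context_distance` recovers a shift of about 15 sectors (90°/360° × 60) with a small distance. That best shift doubles as a coarse yaw estimate for the matched pair.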

Conclusion: What does the future look like?

Instead of a single robot, multiple robots can take part in SLAM, whether for state estimation or for mapping an environment. In collaborative SLAM (C-SLAM), multiple self-driving cars or robots share the SLAM process and its data. This collective effort helps handle ambiguity by pooling the robots’ observations of the distinctive features of different locations. However, keeping map information consistent, performing robust data association across robots, and handling large volumes of sensor data remain challenging. Nevertheless, this approach is promising for addressing visual ambiguity in SLAM.

Another option is to exploit the robot’s capacity for precision: it could pick up very fine details, such as weathering on walls or rust on steel, capture them, discard the rest, and use those details as landmarks. The possibilities are many, but one fact is worth remembering: even the best algorithms can fail completely in unseen scenarios.

We don’t just teach robots to explore; we teach them not to get lost.

Will robots handle visual ambiguity better by becoming more human, or will humans understand robots well enough to devise something exclusively for their kind? The question remains open. ;)

References

[1] What is SLAM? by Flyability
